Introduction

In previous labs throughout the semester, the imagery used was nadir imagery. Nadir imagery is captured with the camera pointing straight down, so the camera axis is vertical, or perpendicular to the ground, and each image is taken from directly above the target. Nadir imagery is used to create orthomosaics and digital surface models (DSMs) in Pix4D.

In this lab, the aerial images used were oblique imagery. Oblique imagery is aerial photography taken with the camera tilted away from vertical, typically at about a 45 degree angle to the ground or to the object that is the subject of the flight. The tilt lets users measure the entire object, rather than just the top of the object as in nadir imagery. Oblique imagery is valuable in the geospatial market because it allows for the creation of 3D models and for measurements of the sides of structures along with the top. It is used in urban planning and crisis management, and it has a variety of other applications.

Methods

The purpose of this lab is to use a process called annotation in Pix4D to learn the fundamentals of processing and correcting oblique imagery. Image annotation is used to remove the unwanted background around an object so that the model of the subject is more accurate. After the initial processing in Pix4D is complete, the annotation toolbar can be accessed by opening the rayCloud view and then selecting an image on the left hand side for annotation. Next, click the pencil icon on the right hand side to begin annotating. Make sure the annotation method is mask, then annotate the image by clicking on every area that is not wanted in the 3D model. After everything but the target is annotated, click apply and repeat the process on other images until all angles are covered. Once enough images have been annotated, open the processing options, check the advanced box, and enable the use annotations option under point cloud and mesh. Then uncheck initial processing, select point cloud and mesh, and process the data with annotations. After completion, turn off the cameras and click on the triangle mesh tab to display the 3D model. The same process was used for all three image sets.

There are three types of annotation that can be used: mask, carve, and global mask. In this lab the mask form of annotation was used. Mask annotation removes the background around the subject. Not every image needs to be annotated because there is considerable overlap between the images. Three different oblique aerial image sets were used in this lab in an effort to create a 3D model of each. Each image set was processed both with and without annotation to see if there were significant differences.
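The idea behind mask annotation can be illustrated with a minimal sketch. This is not how Pix4D implements it internally; it only assumes that an annotated image can be thought of as a grid of true/false flags, and that any point seen in a flagged pixel is left out of the model. The function name `apply_mask` and the sample data are made up for illustration.

```python
def apply_mask(points, mask):
    """Keep only points whose source pixel is not masked.

    points: list of (row, col, label) tuples, one per candidate point,
            where (row, col) is the pixel the point was seen in.
    mask:   2D list of booleans; True means "annotated as background".
    """
    return [p for p in points if not mask[p[0]][p[1]]]


# Hypothetical 3x3 image: the border is background, the centre is the subject.
mask = [
    [True, True, True],
    [True, False, True],
    [True, True, True],
]
points = [(0, 0, "ground"), (1, 1, "truck"), (2, 1, "ground")]

# Only the point that falls on an unmasked pixel survives.
kept = apply_mask(points, mask)
```

Because neighboring images overlap, a background point only has to be masked in the images that are annotated, which is why a handful of well-chosen images can be enough.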

After Pix4D was opened, the first image set was added, the workspace was set up, and the camera model was changed to linear rolling shutter. The processing template selected was the 3D model. This is important to note because previous labs used the 3D map. If the 3D map is selected, processing will be very slow and there will be errors when trying to begin the annotation process. The change is made because no orthomosaics or DSMs will be created, but rather a 3D model that shows all the angles of the subject of the flight.

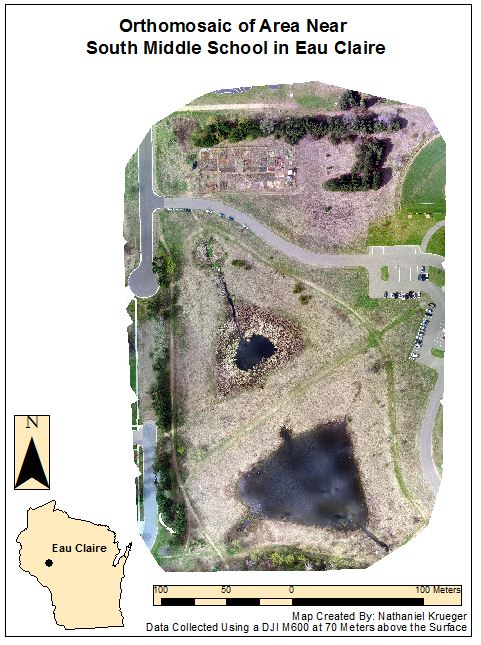

The first image set was of a bulldozer located at the Litchfield Mine, southwest of Eau Claire. The second image set was of a shed located on the property of Eau Claire South Middle School. The third image set was of Professor Hupy's now retired Toyota Tundra in the parking lot near Eau Claire South Middle School. Each of these oblique image sets was taken by a DJI Inspire. The UAV flew in a tornado pattern around the subject in an effort to capture all angles of all sides. Figure 1 below shows how the drone was flown to cover all of the angles. The flight for the truck is shown, though the other two flights looked much the same. Each image set is described in more detail below.

Figure 1: Flight Pattern
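The geometry of a flight like this can be sketched in a few lines. The sketch below is an assumption about what a tornado pattern looks like (stacked circular orbits that widen as they climb), not the actual flight plan used for these data sets. The function `tornado_waypoints`, the radii, and the altitudes are all hypothetical. It also shows where the 45 degree figure comes from: when the orbit radius equals the altitude, the camera must tilt 45 degrees below horizontal to aim at the base of the subject.

```python
import math


def tornado_waypoints(center, rings, photos_per_ring):
    """Return (x, y, altitude, pitch_deg) camera stations around a subject.

    center:          (x, y) of the subject in metres (hypothetical local grid).
    rings:           list of (radius_m, altitude_m), one entry per orbit.
    photos_per_ring: number of evenly spaced photos on each orbit.
    pitch_deg is how far the camera tilts below horizontal to aim at the
    base of the subject; 90 would be nadir.
    """
    cx, cy = center
    waypoints = []
    for radius_m, altitude_m in rings:
        pitch_deg = math.degrees(math.atan2(altitude_m, radius_m))
        for i in range(photos_per_ring):
            azimuth = 2.0 * math.pi * i / photos_per_ring
            x = cx + radius_m * math.cos(azimuth)
            y = cy + radius_m * math.sin(azimuth)
            waypoints.append((x, y, altitude_m, pitch_deg))
    return waypoints


# Two made-up orbits: a low, tight ring and a higher, wider ring.
flight = tornado_waypoints((0.0, 0.0), [(10.0, 10.0), (20.0, 15.0)], 8)
```

In this example the first ring looks down at 45 degrees and the second, wider ring looks down at a shallower angle, so the sides of the subject are seen from more than one tilt.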

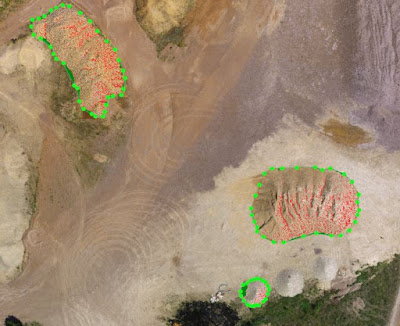

Bulldozer

Figure 2 below shows an annotated image of the bulldozer at the Litchfield Mine. The many small indented spots on the bulldozer are easy to see. These areas are hard to annotate accurately, though as shown below it can be done.

Figure 2

Shed at South Middle School

Figure 3 below shows an annotated image of the shed at South Middle School. The white dashes at the bottom of the image can be tough to get, so be sure to zoom in. The shadow on the left of the image also needs to be annotated carefully, as the software may treat the shadowed part of the building the same as the shadow on the ground.

Figure 3

Pickup Truck

Figure 4 below shows an annotated image of the Toyota Tundra, which was photographed in a parking lot. Note how hard the tires are to annotate, and be sure they are done accurately. Also be sure the bumper and mirrors are not selected, as they are important to making an accurate 3D model.

Figure 4

The rematch and optimize tool was run on each of the data sets to see whether it would improve the results. The conclusion was that rematch and optimize is not necessary, as there was no notable improvement. The process can also take some time depending on the size of the image set, so the additional step was not worth the time.

After many hours spent annotating, a few tips can be offered. Making circles is an effective way to cover a lot of ground. Clicking and holding the cursor makes the annotation continuous. Start at the outside of the image and work in. Be careful not to get too close to the object while holding the mouse button, because a large part of the object could get selected and the eraser will then have to be used.

Results

Below are screen captures that show multiple angles of each of the image sets. Each one is discussed individually in more detail. Ultimately, each model still had flaws caused by the background of the images. In these data sets the annotated models were still distorted by the background, though the ability to eliminate image backgrounds for 3D model creation is an essential tool to have. One of the first things to do before annotating is to increase the size of the box used for annotating. This makes each selection cover a larger area and makes the process go much quicker.

Bulldozer

The bulldozer was by far the most challenging image set to annotate. There were a lot of nooks and crannies that were very difficult to annotate correctly. This set was done first as a trial run to get a feel for how things worked. Five images were annotated. Figures 5-7 are the output images after annotation, and Figures 8 and 9 show the bulldozer with no annotation. The differences are not notable. Even on closer inspection, zoomed very far in, there are minuscule differences at best. This is unfortunate given the time that was spent annotating.

Figure 5: Annotated

Figure 6: Annotated

Figure 7: Annotated

Figure 8: Not Annotated

Figure 9: Not Annotated

Shed at South Middle School

The shed at South Middle School went quite a bit smoother and faster than the other two data sets. The shadows that were cast caused a few issues, and the lines on the track and the fence posts were hard to get at some points. That can be remedied by zooming in, but not so far that the software breaks up the smaller areas into tiny pixels. For this image set 15 images were annotated because the process went very quickly. Along with the increased annotation, rematch and optimize was run to see if the output quality would be better. With more images annotated, one would expect the quality to increase, but unfortunately there was again not a lot of improvement over the images with no annotation. One difference that can be noted is that there is less of a pixelated look on the annotated model, though there is still an error on the peak of the shed. The sides are also a little clearer on the annotated model.

Figure 10: Annotated

Figure 11: Annotated

Figure 12: Annotated

Figure 13: Not Annotated

Figure 14: Not Annotated

Pickup Truck

Annotating the Toyota Tundra was slightly more difficult than the shed at South Middle School but not nearly as tough as the bulldozer. The mirrors and fender flares caused some issues, as the software had a difficult time differentiating between the ground and those objects. The bumper also kept getting selected when it was not supposed to be. As with the two previous data sets, there was no notable difference between the annotated image set and the one that was not annotated.

Figure 15: Annotated

Figure 16: Annotated

Figure 17: Annotated

Figure 18: Not Annotated

Figure 19: Not Annotated

Conclusion

After completion of this lab it would be easy to assume that annotation of oblique imagery is not necessary, though most of the time it is. All in all, it certainly does not hurt the quality of the data set, and any improvement in data quality that can be made should be. Oblique imagery does a nice job of creating a 3D model of structures. If a large image set needs to be annotated, it is recommended that the job be outsourced to a different company. This is due to the large amount of time that annotation takes and the fact that it can be very stressful when areas get annotated that were not intended to be. Other issues with annotation were shadows, clouds, and small objects such as the dashes on the track at South Middle School, the mirrors and fender flares on the truck, and most of the dozer, which had few straight lines and a considerable number of indents that had to be dealt with. When annotating, it was found to be important to annotate images from each angle around the subject. Doing this cuts down on the number of images that need to be annotated, because there will be a lot of overlap with other images if the angles are chosen well. Finally, for whatever reason, some images just do not cooperate. If an image is hard to annotate, it is recommended to simply try a different image.
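The advice to annotate images from each angle around the subject can be turned into a simple selection rule. The sketch below is one possible approach, not a Pix4D feature: it assumes the horizontal camera positions are known, splits the circle around the subject into equal sectors, and keeps the first image found in each sector. The function `pick_spread_images`, the image names, and the coordinates are hypothetical.

```python
import math


def pick_spread_images(cameras, center, n_sectors):
    """Pick one image per angular sector around the subject.

    cameras:   dict mapping image name -> (x, y) camera position.
    center:    (x, y) position of the subject.
    n_sectors: number of equal angular slices to cover.
    Returns the chosen image names ordered by sector.
    """
    cx, cy = center
    width = 360.0 / n_sectors
    chosen = {}
    for name, (x, y) in sorted(cameras.items()):
        azimuth = math.degrees(math.atan2(y - cy, x - cx)) % 360.0
        sector = int(azimuth // width) % n_sectors
        # Keep only the first image (alphabetically) seen in each sector.
        chosen.setdefault(sector, name)
    return [chosen[s] for s in sorted(chosen)]


# Made-up camera positions around a subject at the origin.
cameras = {
    "a": (10.0, 1.0),
    "b": (9.0, 2.0),    # nearly the same view as "a"
    "c": (-1.0, 10.0),
    "d": (-10.0, -1.0),
    "e": (1.0, -10.0),
}
to_annotate = pick_spread_images(cameras, (0.0, 0.0), 4)
```

Here image "b" is skipped because it views the subject from almost the same direction as "a", so annotating it would add little.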

Sources

https://pix4d.com/support/