Introduction

Pix4D converts hundreds or even thousands of images taken from an unmanned aerial vehicle (UAV) into a georeferenced 2D or 3D product. The software builds point clouds, true orthomosaics, and digital surface models, and it can turn skewed aerial images into a highly accurate georeferenced mosaic. Images from manned aircraft can be processed as well; the software is not limited to UAV imagery. The images used in this lab were collected by Joseph Hupy with a DJI Phantom 3 in 2016. The drone was flown over the Litchfield mine, southwest of the city of Eau Claire, and two data sets from that site are used to complete this lab. Pix4D generates maps from the aerial images, and those maps can then be discussed and dissected. Pix4D is powerful software with many applications in many different settings. To understand what makes data suitable for processing in Pix4D, a few questions must be answered first; they are listed below. The answers help the Pix4D user understand how the UAV should be flown and how to maintain data quality. This lab focuses on building maps with Pix4D, which is widely regarded as easy to use and as top-of-the-line software. No ground control points (GCPs) and no oblique imagery are used in this exercise, though the software can handle both.

What is the overlap needed for Pix4D to process imagery?

As a general standard, there should be at least 75% frontal overlap in the flight direction and at least 60% side overlap between flight lines. Keeping a constant height above the terrain surface also helps ensure data quality. There are exceptions: over densely vegetated areas there should be at least 85% frontal overlap and at least 70% side overlap, and flying higher can also help by making the aerial images appear less distorted. Increased overlap and increased height help ensure that the data represent the terrain correctly.
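To make the overlap percentages concrete, the sketch below converts them into the distance between exposures and between flight lines. It assumes a nadir camera over flat terrain; the flight height and field-of-view angles are hypothetical placeholders, not the specifications of the camera used in this lab.

```python
import math

def ground_footprint(height_m, fov_deg):
    """Ground distance covered by one image side (nadir camera, flat terrain)."""
    return 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)

def spacing(footprint_m, overlap):
    """Distance between neighbouring exposures or flight lines for an overlap fraction."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be a fraction in [0, 1)")
    return footprint_m * (1.0 - overlap)

# Hypothetical flight parameters (assumptions for illustration only).
height_m = 50.0
fov_along_deg = 60.0    # field of view in the flight direction
fov_across_deg = 75.0   # field of view across the flight direction

along = ground_footprint(height_m, fov_along_deg)
across = ground_footprint(height_m, fov_across_deg)

print(f"Trigger a photo every {spacing(along, 0.75):.1f} m (75% frontal overlap)")
print(f"Space flight lines {spacing(across, 0.60):.1f} m apart (60% side overlap)")
```

Because the footprint grows with height, the same overlap percentage allows wider spacing on a higher flight, which is one reason flying higher eases the overlap requirement.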

What if the user is flying over sand/snow, or uniform fields?

When flying over flat agricultural terrain, the user should plan for at least 85% frontal overlap and at least 70% side overlap; again, flying higher can improve quality. The same minimums apply to special cases such as sand or snow, where the exposure settings should also be adjusted to capture as much contrast as possible. Oceans are impossible to reconstruct because there are no land features. When flying over rivers or lakes, the drone should be flown higher in order to capture as many land features as possible.
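The minimum overlaps quoted so far can be collected into a small lookup for checking a flight plan before going into the field. This is only a sketch: the terrain labels and the function name are made up for illustration, while the percentages are the ones stated in the text.

```python
# Minimum overlap percentages from the text: (frontal, side).
MIN_OVERLAP = {
    "general": (75, 60),
    "dense_vegetation": (85, 70),
    "flat_agriculture": (85, 70),
    "sand_or_snow": (85, 70),
}

def check_overlap(terrain, frontal_pct, side_pct):
    """Return a list of warnings; an empty list means the plan meets the minimums."""
    min_frontal, min_side = MIN_OVERLAP[terrain]
    warnings = []
    if frontal_pct < min_frontal:
        warnings.append(f"frontal overlap {frontal_pct}% is below {min_frontal}%")
    if side_pct < min_side:
        warnings.append(f"side overlap {side_pct}% is below {min_side}%")
    return warnings

# Example: a plan that would be fine in general but is too sparse for snow.
print(check_overlap("general", 75, 60))
print(check_overlap("sand_or_snow", 75, 60))
```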

What is Rapid Check?

Rapid Check is made for use in the field: it can verify that the correct areas were covered and that the data collection was sufficient. Rapid Check is built into Pix4D; it is not stand-alone software. Its one downside is that it processes the data so quickly that the results can be inaccurate. Rapid Check should be used as a preview of the data in the field, and the data should still be processed fully in the office when more time is available.

Can Pix4D process oblique images? What type of data do you need if so?

Yes, Pix4D can process oblique images, provided the subject is photographed from many different angles so that a quality data set can be produced. An oblique image is one taken when the camera is not perpendicular to the ground or the object. Oblique imagery can be combined with other kinds of imagery; in those cases more overlap is needed and ground control points are recommended. According to the Pix4D site, an image should be taken every 5 to 15 degrees to make sure there is sufficient overlap.
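As a rough illustration of what the 5 to 15 degree guideline implies, the sketch below counts the images needed for one pass around an object. The full 360 degree orbit is an assumption made for the example; the text only states the angular step.

```python
import math

def images_per_orbit(step_deg, sweep_deg=360.0):
    """Number of images needed to cover sweep_deg with one image every step_deg."""
    if step_deg <= 0:
        raise ValueError("step_deg must be positive")
    return math.ceil(sweep_deg / step_deg)

print(images_per_orbit(15))  # 24 images at the coarse end of the guideline
print(images_per_orbit(5))   # 72 images at the fine end of the guideline
```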

Can Pix4D process multiple flights? What does the pilot need to maintain if so?

Yes, Pix4D can process multiple flights, but the operator needs to ensure that there is enough overlap between the images taken on the different flights. It is also important that the flights were made under the same, or nearly the same, conditions: similar cloud cover, similar sun position, and similar overall weather.

Are GCPs necessary for Pix4D? When are they highly recommended?

Ground control points are not required by Pix4D. As long as there is adequate overlap, flights taken with the camera perpendicular to the ground should process without issues. If the images have no geolocation, the operator is strongly urged to use GCPs. Oblique aerial imagery can pose a few issues, so GCPs should also be used with oblique flight data, where they help ensure that data integrity is not compromised.

What is the quality report?

A quality report is displayed after each processing step. It tells the user whether processing failed or completed and whether the data is ready to be worked with. The quality report runs diagnostics on the images, the dataset, camera optimization, matching, and georeferencing. This is essential because it confirms that the images have enough keypoints and that the images have been calibrated.

Methods

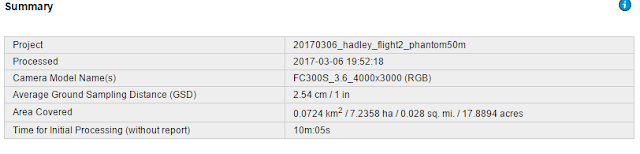

The first step in this lab is to open Pix4D and start a new project. The project was named so that it can be told apart from other assignments. The leading digits give the year, then the month, and lastly the day on which the project was started. The location where the drone was flown, the type of drone, and the flight height are also included in the name. The resulting name was 20170306_hadley_phantom50m, which captures all of the information discussed above. Figure 1 below shows how and where the data was saved.
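The naming convention described above can be sketched as a small helper. The function and parameter names are hypothetical; only the resulting pattern (date, location, platform, height) comes from the text.

```python
from datetime import date

def project_name(start, location, platform, height_m):
    """Build a name of the form YYYYMMDD_location_platform<height>m."""
    return f"{start:%Y%m%d}_{location.lower()}_{platform.lower()}{height_m}m"

print(project_name(date(2017, 3, 6), "hadley", "phantom", 50))
# 20170306_hadley_phantom50m
```

Putting the date first in year-month-day order means the project folders sort chronologically in a file browser.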

Figure 1

Figure 2

From here the images were added from a folder provided by Professor Joseph Hupy. The images from flight 1 and flight 2 were both added, though separately, in an effort not to bog down the computer. There were 68 images added from flight 1 and 87 images added from flight 2. Figure 2 above shows how the images appeared after they were added from flight 1.
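Before importing, it can be worth confirming that each flight folder holds the expected number of images. The sketch below is one way to do that; the folder layout and the list of file extensions are assumptions, and the expected counts are the ones reported above.

```python
from pathlib import Path

# File extensions treated as images (an assumption; adjust to the camera's output).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".tif", ".tiff"}

def count_images(folder):
    """Count image files directly inside folder (non-recursive)."""
    return sum(
        1
        for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )

def verify_counts(folders, expected):
    """Return {flight: (found, expected)} for every flight whose count differs."""
    mismatches = {}
    for name, folder in folders.items():
        found = count_images(folder)
        if found != expected[name]:
            mismatches[name] = (found, expected[name])
    return mismatches
```

With hypothetical folder paths, a call such as `verify_counts({"flight1": "data/flight1", "flight2": "data/flight2"}, {"flight1": 68, "flight2": 87})` returns an empty dictionary when both folders match the counts from this lab.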

Once the images are added, it is important to take note of the coordinate system. The default was used for this exercise, but it could have been changed, and if the user is not happy with the coordinate system once the data is in ArcGIS, it can be changed there as well. Next, under the selected camera model, use the edit option to make one change. For whatever reason, Pix4D lists the Phantom 3 camera as having a global shutter, when in reality it has a linear rolling shutter. All of the other camera specifications are correct.

After clicking Next, the processing options can be chosen, and the 3D map option was selected. Selecting the 3D map option means that Pix4D will create a digital surface model (DSM). After selecting the 3D map option and clicking Finish, the map view is brought up, which gives the user a general idea of what the flight looked like. From here, be sure to uncheck the boxes next to "Point Cloud and Mesh" and "DSM, Orthomosaic and Index" so that the first run does not take hours to complete. Then, under the processing options in the lower left corner, a series of settings can be changed to improve quality and speed. Under the "DSM, Orthomosaic and Index" tab the method was changed to triangulation; from past experience this is the best option to select. The initial processing can then be started. Once the initial processing is complete, check the quality report for problems. Next, uncheck box 1 (initial processing), check boxes 2 and 3, process again, and once more check the quality report.

Figure 3

Figure 3 above illustrates the steps discussed in the preceding paragraph. Boxes 2 and 3 were unchecked to aid in timely processing. At the time the screenshot was taken the software had just started running; it was only 5% through the first of 8 tasks. Figure 4 below shows the second run for flight 1, this time with box 1 unchecked and boxes 2 and 3 checked.

Figure 4

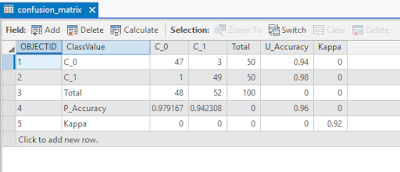

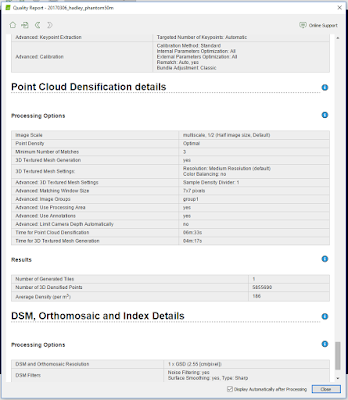

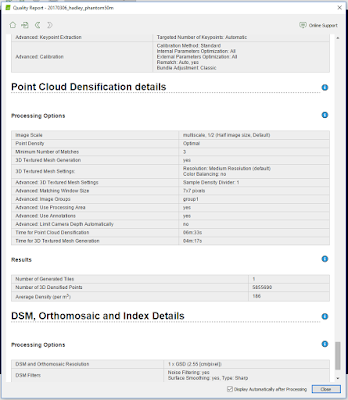

The quality report gives information on the accuracy and quality of the data, which is essential because it tells the user whether there were any errors. The report shows a summary of the data, a quality check, and a map of the image overlap. The overlap map shows that much of the area is covered by at least 4 overlapping images, which supports the accuracy of the results. Once flight 1 and flight 2 have both been processed, the outputs are ready to be made into maps in ArcMap. The quality report is displayed below in Figure 5. The report is fairly lengthy, so the screenshot shows only part of it.

Figure 5

Figure 6 below is part of the quality check, which confirms that all of the images were calibrated and that the data was accurate.

Figure 6

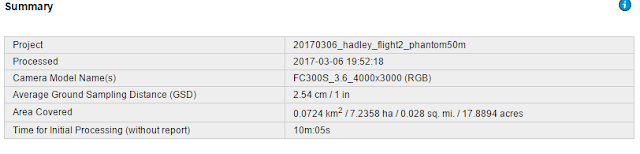

Figure 7 below shows the name of the project, when it was processed and various other information.

Figure 7

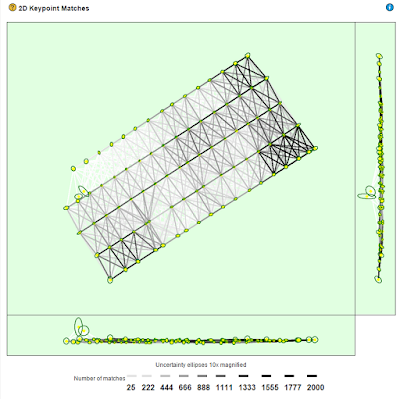

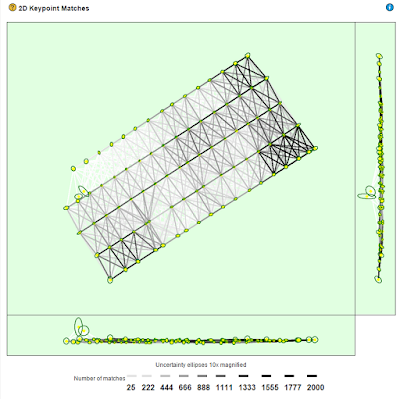

Figure 8 below displays the number of 2D keypoint matches. This also gives an idea of how accurate the data was and which areas may be slightly more accurate.

Figure 8

Results

After processing was completed, a video was created in Pix4D to give the viewer an idea of the flight area. It provides a useful visual reference, and the high resolution means a lot of detail is visible. The video is available on YouTube at the link below.

https://youtu.be/KRKbkdaLwUk

Figure 9 is a screenshot of the area after the data was processed. The result is very high resolution. This view was achieved by deselecting the camera and then selecting the triangle mesh tab.

Figure 9

After processing was finished in Pix4D, the data was brought into ArcMap so that a few maps could be made. The maps are displayed below as Figure 10 and Figure 11.

Figure 10

Figure 10 above is a digital surface model overlaid with a hillshade of the Litchfield mine. The piles of sand are clearly visible in bright red and the roads are depicted in yellow. A mine makes for an interesting map because of its drastic elevation changes, much like the first sandbox activity that was completed this semester.
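The hillshade in this lab was produced in ArcMap, but the idea behind it can be sketched in a few lines: each cell is shaded by how directly its surface faces a light source. The example below is a simplified Lambertian model for a single cell, not ArcMap's implementation; the function name is hypothetical, and the default light position (azimuth 315 degrees, altitude 45 degrees) is an assumed common convention.

```python
import math

def hillshade(dz_east, dz_north, azimuth_deg=315.0, altitude_deg=45.0):
    """Shade in [0, 1] for a surface with the given elevation gradients.

    dz_east and dz_north are the rise in elevation per unit distance
    toward the east and toward the north.
    """
    az = math.radians(azimuth_deg)    # clockwise from north
    alt = math.radians(altitude_deg)  # angle above the horizon
    # Unit vector pointing toward the light: (east, north, up).
    light = (math.sin(az) * math.cos(alt), math.cos(az) * math.cos(alt), math.sin(alt))
    # Upward unit normal of the plane z = dz_east * x + dz_north * y.
    norm = math.sqrt(dz_east ** 2 + dz_north ** 2 + 1.0)
    normal = (-dz_east / norm, -dz_north / norm, 1.0 / norm)
    shade = sum(n * l for n, l in zip(normal, light))
    return max(0.0, shade)  # surfaces facing away from the light are fully dark

flat = hillshade(0.0, 0.0)
facing_light = hillshade(1.0, -1.0)   # slope that faces northwest, toward the light
facing_away = hillshade(-1.0, 1.0)    # slope that faces southeast, away from the light
print(flat, facing_light, facing_away)
```

Steep faces such as the sides of sand piles swing between bright and dark depending on which way they face, which is what makes the piles stand out when the hillshade is placed under the colored DSM.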

Figure 11 below is an orthomosaic of the Litchfield mine, located southwest of Eau Claire. The orthomosaic does a good job of showing what the mine is made up of. There are sand piles on the west side of the map and some vegetation farther east. The main road runs in from the southeast and splits to either side of the large sand pile in the center.

Figure 11

Conclusion

As a final critique, Pix4D is very good software for processing UAS data. It is very user friendly, and it produced high-resolution, aesthetically pleasing output. Even without prior experience with the program, the general instructions outlined in the PowerPoint were enough to process data with Pix4D.

Sources

Pix4D Support

https://support.pix4d.com/hc/en-us/community/posts/203318109-Rapid-Check#gsc.tab=0